[ Updated Oct. 25, 2020, with a major rewrite. When I first wrote this article, things worked, but not well. After a week of additional research and work, I realized the answer was to add a 10G switch. This article was changed throughout to reflect this new hardware. ]

In this article, I will:

I’ve wanted to have the gear necessary to write this article for more than a year. I’m looking forward to sharing what I’ve learned.

NOTE: All this gear was purchased by me, in case you were interested.

EXECUTIVE SUMMARY

The absolute fastest and most consistently-performing storage you can have is that which is directly connected to your computer. No shared storage comes close. As well, the least expensive storage you can have, on a per user basis, is also that which is directly connected.

If high performance is your goal, the best option is to direct-connect an SSD RAID to your computer. Even a 4-drive RAID filled with spinning hard disk drives easily exceeds the overall speed of a 10G connection to a server when comparing both read and write speeds. Replace the HDDs with SSDs and direct-connect speeds blow any Ethernet-connected device out of the water.

If sharing files is more important than performance, the most cost-effective option is to connect one or more servers to a data switch via 10G Ethernet, then have individual editors connect to that switch via 1 Gb Ethernet. This configuration supports up to 10 editors getting data at close to the maximum 100 MB/second speed of 1 Gb Ethernet, which is enough for most HD productions.

The fewer users on the network, the faster data transfer speeds will be, up to the maximum speed of the communications protocol.

There are also benefits to connecting key users to shared storage via 10G Ethernet to take advantage of the extra speed. To do so, however, requires servers, switches, cables and computer interfaces that support both 10G Ethernet and jumbo frames.

GET STARTED

For many of us, the ability to quickly share files within a workgroup is an equal priority to performance. In fact, we are often willing to trade off raw speed for the flexibility of file sharing. Sharing requires a server connected to each computer via Ethernet.

Ethernet was first “created in 1973 by a team at Xerox Corporation’s Palo Alto Research Center (Xerox PARC) in California. The team, led by American electrical engineer Robert Metcalfe, sought to create a technology that could connect many computers over long distances. Metcalfe later forged an alliance between Xerox, Digital Equipment Corporation, and Intel Corporation, creating a 10-megabit-per-second (Mbps) standard, which was ratified by the Institute of Electrical and Electronics Engineers (IEEE).” (Britannica.com)

“Ethernet networks have grown larger, faster, and more diverse since the standard first came about. Ethernet now has four standard speeds: 10 Mbps (10 Base-T), 100 Mbps (Fast Ethernet), 1,000 Mbps (Gigabit Ethernet), and 10,000 Mbps (10-Gigabit Ethernet). Each new standard does not make the older ones obsolete, however. An Ethernet controller runs at the speed of the slowest connected device, which is helpful when mixing old and new technology on the same network.” (Britannica.com)

KEY POINTS

In order to share files, we need to use a server. To connect multiple computers to the server we need to use a switch. Switches can handle multiple users connecting at different speeds. If we want a user to connect at the fastest Ethernet speed commonly available today, they need to use 10G Ethernet. This either requires a computer with a built-in 10G Ethernet port or getting an adapter that converts Thunderbolt to 10G Ethernet. In this article, I’m using an adapter.

As well, to enable high-speed data sharing between users and the server, we need a switch. While it is possible to connect one user directly to the server, it is FAR cheaper to just use direct-connect storage instead. So, to properly harness the speed of 10G, you’ll need a:

DEFINITIONS

THE PIPE VS. THE DATA

There are four factors that determine the speed of your network:

Ethernet, like FireWire, SCSI, USB and Thunderbolt, is a protocol, a pipe, that carries data from one place to another. Each of these pipes has a maximum speed that it can support. However, the actual speed of the data inside the pipe depends upon both the maximum size of the pipe and the data transfer speeds of the devices attached to it.

For example, a spinning hard disk today transfers data around 200 MB/s. However, if you connect that hard disk to your computer using USB 2, you’ll only get about 25 MB/s, because that is the speed limit of USB 2. Connect the same hard drive via FireWire 800 and data transfer increases to around 80 MB/s, again due to the speed limit of FireWire. Gigabit Ethernet maxes out, as we will see shortly, around 110 MB/sec.

In all these cases, the limiting factor on performance is the size of the pipe that carries the data.
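To make the "pipe vs. data" idea concrete, here is a minimal sketch that treats the transfer speed as the slower of the device and the pipe. The numbers are the approximate figures quoted above; the function name is my own, and real-world results will vary.

```python
# Approximate sustained speeds in MB/s, taken from the paragraph above.
HARD_DISK = 200
PIPES = {"USB 2": 25, "FireWire 800": 80, "Gigabit Ethernet": 110}


def effective_speed(device_mb_s, pipe_mb_s):
    """The slower of the device and the pipe sets the transfer speed."""
    return min(device_mb_s, pipe_mb_s)


for name, limit in PIPES.items():
    print(f"Hard disk over {name}: about {effective_speed(HARD_DISK, limit)} MB/s")
```

In every case listed, the pipe is the smaller number, so the pipe sets the speed.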

NOTE: The theoretical maximum speed for 1 Gb Ethernet is 125 MB/s (1,000 megabits per second divided by 8 bits per byte). However, general system overhead reduces that to 100 – 110 MB/s. The theoretical speed of 10G Ethernet is 1,250 MB/sec. Practically, this will be closer to 1,100 MB/sec, depending upon the storage devices inside the server.
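The theoretical figures come from simple arithmetic: link speeds are quoted in bits per second, while storage speeds are quoted in bytes per second. A quick sketch of the conversion, assuming decimal units (1 Gb = 1,000 Mb) and 8 bits per byte:

```python
def line_rate_mb_per_s(gigabits_per_s):
    """Convert a link speed in Gb/s to MB/s (decimal units, 8 bits per byte)."""
    return gigabits_per_s * 1000 / 8


print(line_rate_mb_per_s(1))   # 125.0  (1 Gb Ethernet)
print(line_rate_mb_per_s(10))  # 1250.0 (10G Ethernet)
```

The gap between these numbers and what you actually measure is protocol and system overhead.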

With Thunderbolt and 10G Ethernet, the pipe is finally larger than any single device connected to it. So, measuring performance now requires an understanding of the speed of the storage device as well as the size of the pipe.

NOTE: In the case of servers, speed also depends upon the number of users who are accessing that server at the same time. The more users, the slower the data transfer rate for each user.

As I also mentioned, the speed of the different devices on your network is a contributing factor to its overall speed. Here’s an article that compares the speed of internal, external and shared storage.

MORE DEFINITIONS

NOTE: If you want to connect multiple devices so that you can share files, you need to use a switch. Some wireless networks act as switches allowing different computers to send files to printers, for example, but, most of the time, physical switches are at the heart of any network.

The way ALL networks work is that an end-user connects to a switch which then distributes data requests to various servers, printers, the internet or other connected devices.

When you need to pull data from the server, the request goes from your computer to the switch to the server, then the data comes back. Additionally, because servers are shared between multiple users, the more users accessing the server at the same time, the slower the data transfer. This is true for all shared storage.

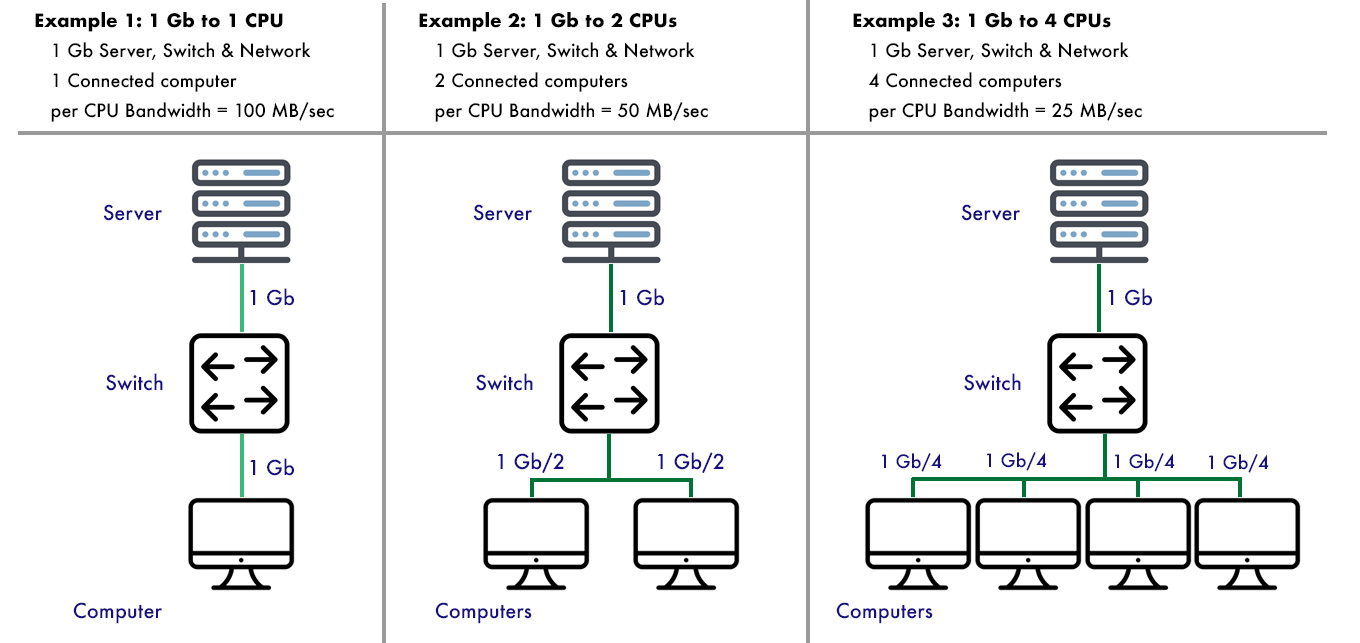

Here are some examples:

Example 1 illustrates one user connected to a 1 Gb switch via 1 Gb Ethernet. The server is also attached to the switch via 1 Gb Ethernet. When a single user requests data, the switch, server and computer all transfer data at the full 1 Gb speed.

Example 2 adds a second user, also making a request for data via 1 Gb Ethernet. Now, both users talk to the switch at 1 Gb speeds, the switch feeds both requests to the server, but the server can only transfer data at a maximum of 1 Gb, because that’s the speed of its connection. This means each user only gets their data at 1/2 Gb speed, or, roughly, 50 MB/s, because half the server output is going to user 1, while the other half is going to user 2. The speed of the server’s connection to the switch becomes a gating factor for speed.

NOTE: This example assumes each user is asking for a large file and both are accessing the server at the same time.

Example 3 increases this access to four users. Now, the connection between the server and the switch becomes a real bottleneck, because each user now only gets data at 1/4 Gb speed, or, roughly, 25 MB/sec. Obviously, adding more users to the same server makes any kind of media editing very difficult as the data transfer rate changes constantly.

NOTE: These are idealized examples, but you get the picture; the more users accessing the server at the same time, the less server bandwidth that’s available to each user.

The easiest way to fix this speed bottleneck is to provide a faster connection between the server and the switch. This can be done with port aggregation (another long article) or, related to this article, connecting the server to the switch via 10G Ethernet; provided the switch supports it. Some do, most don’t.

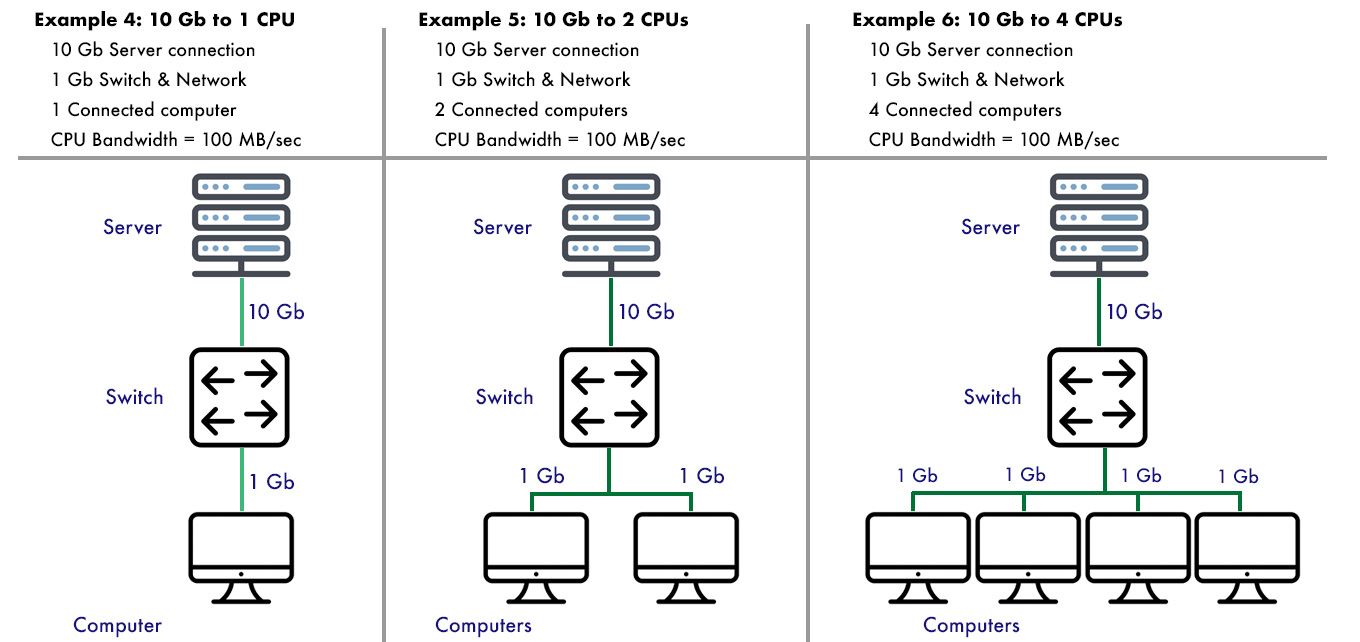

Examples 4 – 6 illustrate the speed differences to the individual user by having a faster connection between the server and the switch. In this example, even with four users, every user is getting its full bandwidth of media. Why? Because the server can feed data to the switch faster than the combined data needs from all four users (10 Gb vs. 4 Gb).
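The six examples can be sketched in a few lines. This is an idealized model, not a measurement: it assumes every user is reading a large file at the same moment, that the server link is shared evenly, and that 1 Gb of link speed delivers roughly 100 MB/s of usable data. The function names are my own.

```python
def per_user_gbps(server_link_gbps, user_link_gbps, users):
    """Idealized share per user when all users read large files at once."""
    fair_share = server_link_gbps / users
    # A user can never get more than their own connection to the switch allows.
    return min(user_link_gbps, fair_share)


def gbps_to_mb_s(gbps, usable_mb_s_per_gbps=100):
    """Rough usable throughput, assuming about 100 MB/s per 1 Gb of link speed."""
    return gbps * usable_mb_s_per_gbps


for server_link in (1, 10):
    for users in (1, 2, 4):
        share = per_user_gbps(server_link, 1, users)
        print(f"{server_link}G server link, {users} user(s): "
              f"about {gbps_to_mb_s(share):.0f} MB/s each")
```

With a 1 Gb server link, the per-user share drops as users are added. With a 10G server link, each 1 Gb user stays at their own connection's limit until more than ten users are active at once.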

The point of these examples is to illustrate that there are many ways of leveraging the speed of 10G Ethernet. At a minimum, you need a high-speed connection between your server and the switch.

In an ideal world, we would get the best performance by having all switches, servers and computers support 10G Ethernet. Achieving this would require:

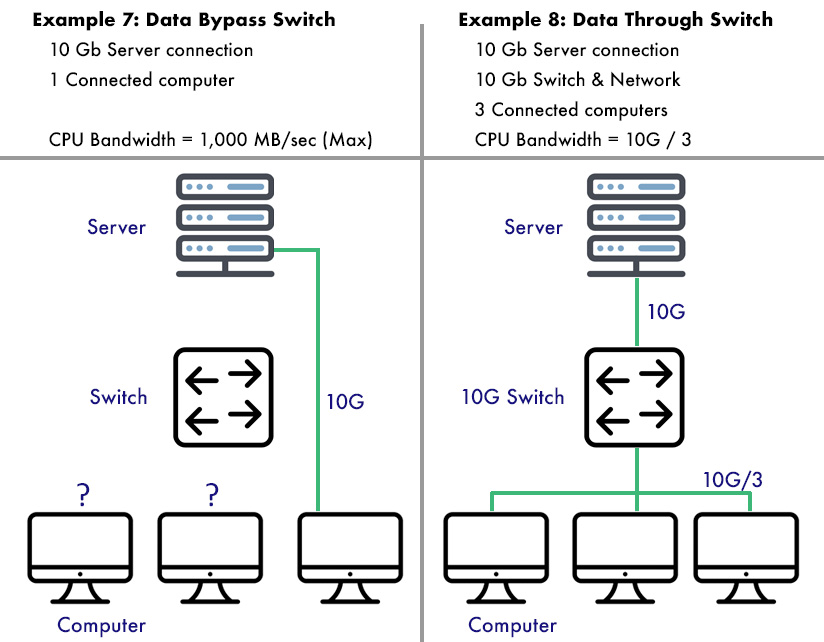

UPDATE: Originally, I tried connecting one computer directly to the server. It worked… but all the other computers on the network received no benefit. Worse, I couldn’t connect the 10G computer to the Internet. What I had done was spend a ton of money to buy a server, only to use it as direct-connect storage; with no Internet access. Sheesh…

So, I bought a NetGear XS505M switch. This has four 10G ports on it. One went to the server, one to my existing 1G switch, which covered all my slower devices, leaving two 10G ports for the two computers I needed to have high-speed access to the server. Because I only had a few devices that needed high-speed, I didn’t need a 10G switch with lots of ports. The good news is that the performance of one computer through the switch, for example, representing a media editor, easily came close to maxing out the 10G pipe, while other users could still transfer files at close to 1G max speeds.

NOTE: Cables also make a difference. The high-speed of 10G Ethernet requires cables specially constructed to support it. Those are rated as Cat 6A or 7. Lower-rated cables can carry data, but not at the full 10G speed. 10G Ethernet also requires all cable runs to be less than 100 meters.

I was able to successfully use Cat 5e cables for short distances at full 10G speeds, but if I were wiring an office, I’d use Cat 6A.

THE SYSTEM

(Apple 2018 Mac mini)

For the purpose of this tutorial, I’m connecting a Mac Mini (2018) to a Synology DS 1517+ server. The Synology has five drives, configured as a RAID 5. If that RAID were directly attached, I would expect at least 600 MB/sec in read/write speeds. I’m using the internal 1 Gb Ethernet port on the Mac mini to measure baseline speeds.

Because the Mac mini does not have a built-in 10G port, I need to add some extra gear to jump to 10G speeds.

EXISTING HARDWARE

NEW STUFF

(Synology DS 1517+ 5-bay server)

NOTE: The Synology SNV3500-400G SSD cache is not needed for 10G Ethernet, but, as I wrote in this article, the addition of the SSD card made a big difference in the responsiveness of the server; especially in displaying directories and initial access.

(NetGear ProSafe XS505M 4-port switch)

UPDATE: When I first wrote this article, I thought I could make this system work without a switch. I couldn’t. The NetGear is an unmanaged switch, which means you just plug stuff into it and it works. Designed for small networks, it supports jumbo frames up to 9K, and all Ethernet speeds that I need: 100M, 1G, 2.5G, 5G and 10G. Plug it in and it self-configures.

My existing switch is an 18-port Cisco SG-200 18 which has been rock-solid for years. With the NetGear, the Cisco plugs into Port 1, the Synology into Port 4 and my two high-speed Macs go into Ports 2 & 3. So far, everything is working great.

SIDEBAR: OWC THUNDERBOLT 3 10G ETHERNET ADAPTER

(OWC Thunderbolt 3 10G Ethernet Adapter)

Just a quick word on the OWC Thunderbolt 3 10G Ethernet Adapter. Using it could not be easier: connect it to a Thunderbolt 3 port on your computer, then plug the Ethernet cable into the other end.

The unit instantly self-configures for 100 Mbps Ethernet, as well as 1, 2.5, 5 and 10G Ethernet. About the size of two decks of cards stacked on top of each other, it weighs a pound (16 oz / 453 grams) and, when operating, runs warm to hot.

It is dead quiet and just works. Plug it in, then worry about something else.

NOTE: Here’s my review of the OWC Thunderbolt 3 10G Ethernet Adapter.

PREPPING THE HARDWARE

To get the hardware ready:

NOTE: The Synology adapter was easy to install and worked without any hardware hassles. The server software, though, needs to be configured to work with the adapter, which I cover below.

SOFTWARE CONFIGURATION

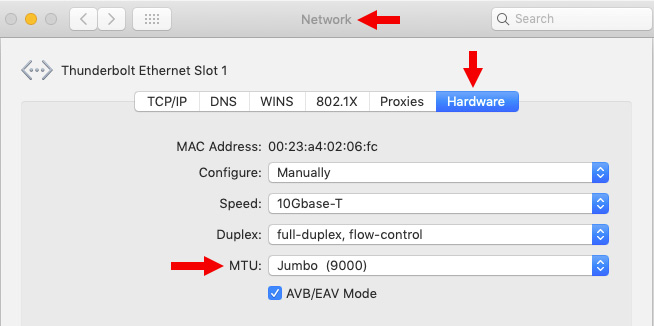

Ethernet sends data in packets, each called a “frame.” A normal Ethernet frame holds up to 1,500 bytes of data. For 1 Gb Ethernet or slower, this is fine. But as speeds get faster, performance improves as the size of the frame increases. This new, bigger frame is called a “jumbo” frame and is 9,000 bytes in size.
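Here is a rough sketch of why bigger frames help. It compares a standard 1,500-byte frame with a 9,000-byte jumbo frame on a 10G link. The overhead figures are my assumptions, not measurements from this system: 38 bytes of Ethernet framing per frame (preamble, header, checksum and the gap between frames) and 40 bytes of TCP/IP headers with no options.

```python
# Assumed per-frame overhead in bytes (not measured on this network).
WIRE_OVERHEAD = 8 + 14 + 4 + 12   # preamble, Ethernet header, checksum, inter-frame gap
TCP_IP_HEADERS = 20 + 20          # IPv4 header plus TCP header, no options


def payload_efficiency(mtu):
    """Fraction of the bytes on the wire that is actual file data."""
    return (mtu - TCP_IP_HEADERS) / (mtu + WIRE_OVERHEAD)


def frames_per_second(mtu, link_bits_per_s=10_000_000_000):
    """How many full-size frames a saturated link carries each second."""
    return link_bits_per_s / 8 / (mtu + WIRE_OVERHEAD)


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} payload, "
          f"{frames_per_second(mtu):,.0f} frames per second at 10G")
```

Under these assumptions, the payload share only rises from about 95% to about 99%. The bigger change is that a full 10G link carries nearly six times fewer frames per second, which means far fewer frames for the computer, adapter and server to process.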

So, to configure a Mac for this faster, 10G connection, here’s what we are about to do:

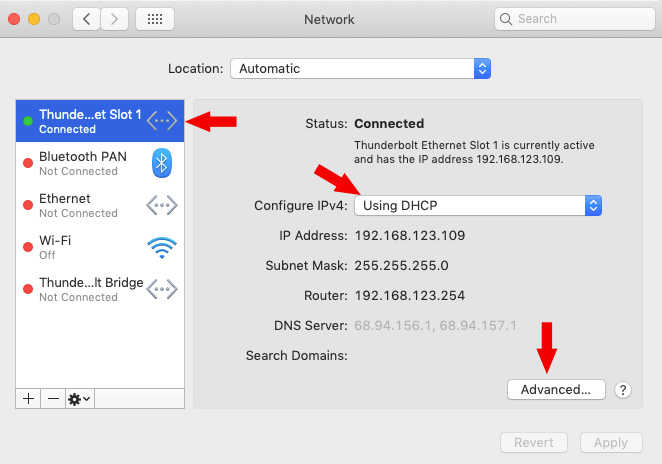

ON THE MAC

NOTE: If you have a built-in 10G Ethernet connection on your Mac, select it, then skip to the second bullet.

NOTE: The largest a jumbo frame can be is 9,000 bytes. You can make it smaller, but there’s no benefit to doing so in most cases.

UPDATE – HIGH-SPEED PLUS THE INTERNET!

UPDATE: Originally, I showed how to connect to the web using two Ethernet ports, which will work, but I don’t advise it. Adding a 10G switch, even a small one, avoids the additional hassle of double-cabling and using two separate Ethernet ports.

NOTE: Internet connections are far, far, FAR below 1 Gb Ethernet speeds so using a slower port won’t make any difference in your Internet connection.

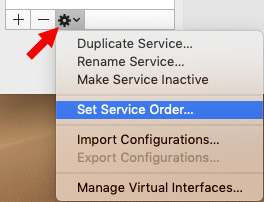

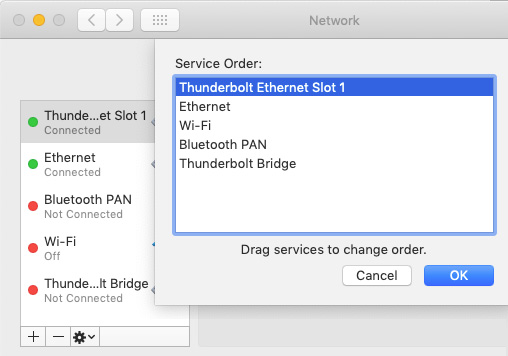

After configuring the 10G connection, click the Gear icon and select Set Service Order. A Mac always attempts to connect to the highest listed service in this sidebar. If that fails, it jumps down to the second, and so on.

In the Service Order window, drag the name of the 10G connection to the top, then make sure the built-in Ethernet port is listed second.

Once the OWC device is connected to the switch, the second Ethernet port is no longer needed and can be turned off.

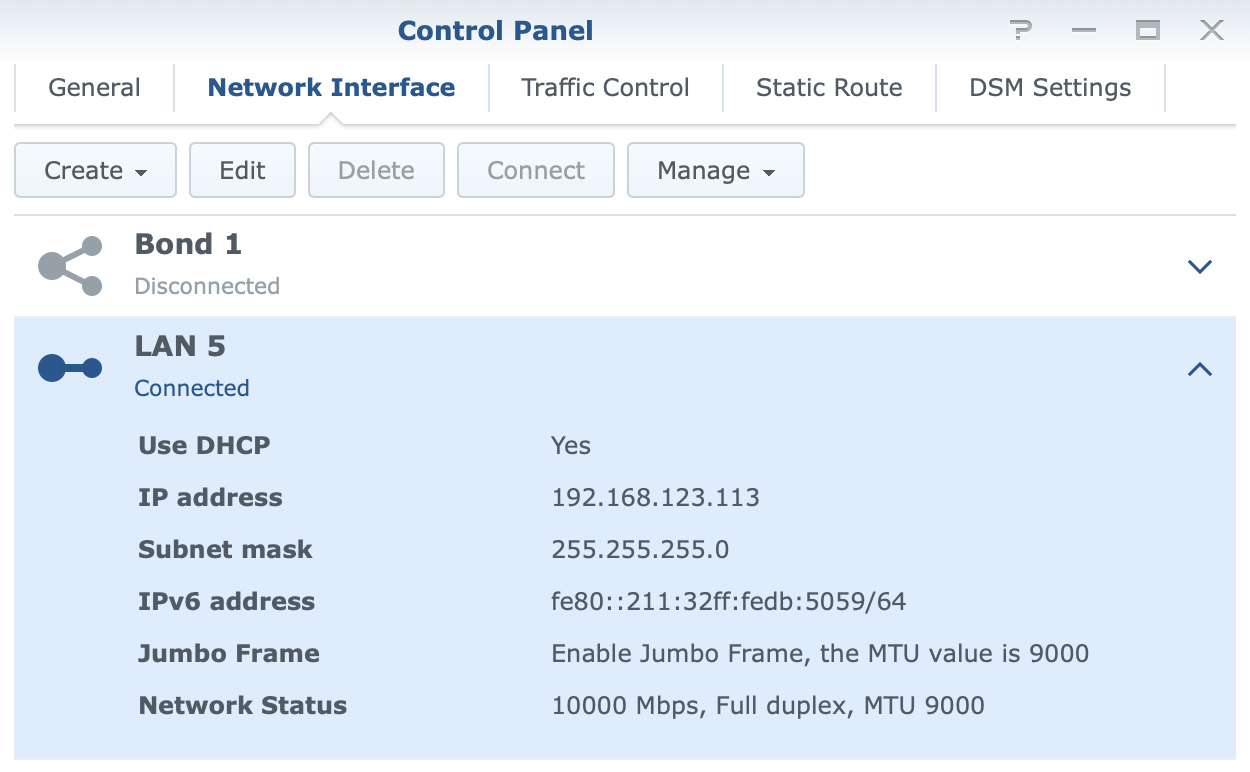

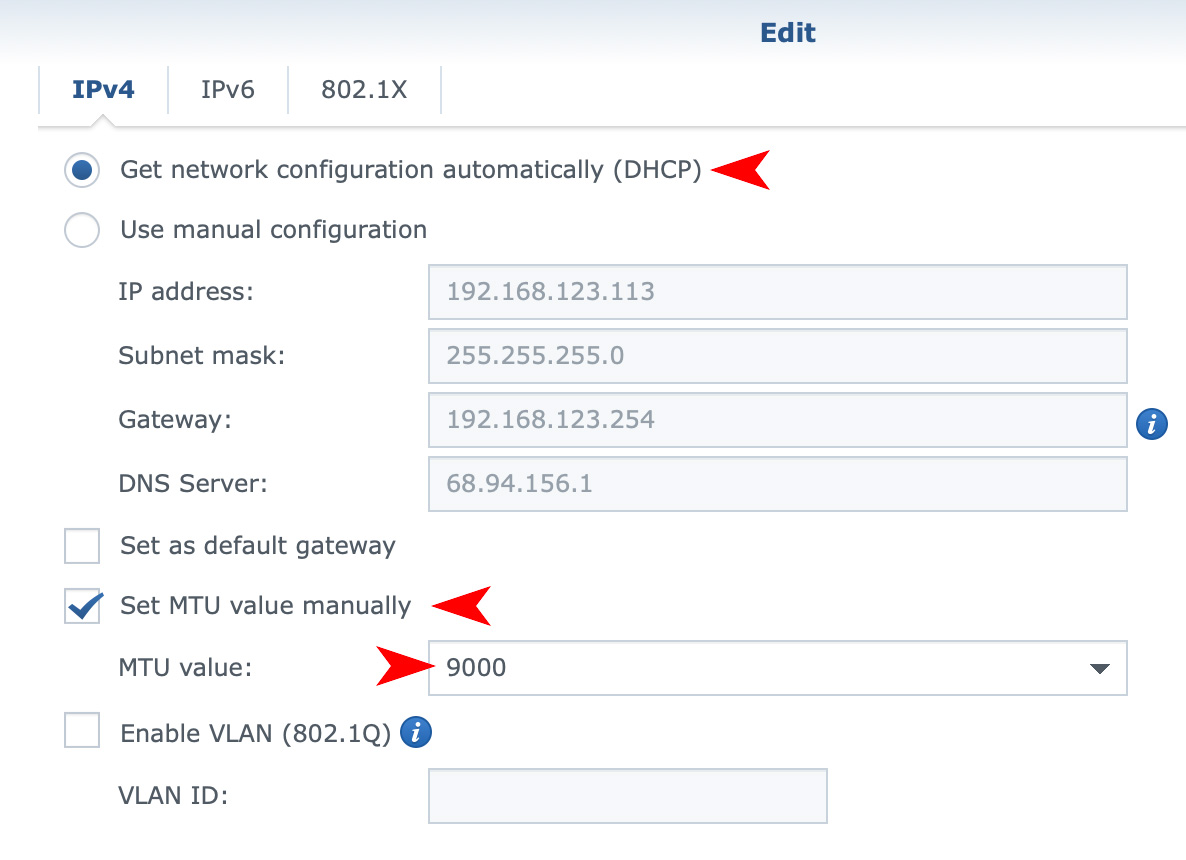

CONFIGURE THE SYNOLOGY SERVER

What needs to be done on the server is enable the new 10G port and disable the old one. As long as you are going through a switch, the configuration is easy. Without the switch, it is a pain in the neck.

NOTE: The specific menus and screens will vary depending upon the server you are using. In my example, I’m using the Synology operating system.

NOTE: Resetting the MTU value requires a restart of network services. Also, jumbo frames must be turned on for all devices that connect to this port. Since the switch is unmanaged, there’s nothing to turn on; jumbo frames are supported automatically.
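Because jumbo frames must be enabled on every device in the path, it can help to confirm that a full-size frame really makes it from the Mac to the server. One way, sketched below, is a ping that is not allowed to fragment. This assumes the macOS `ping` command, where `-D` sets the Don't Fragment bit and `-s` sets the payload size. The server name `diskstation.local` is a placeholder, not the name of my server.

```python
import subprocess

IP_HEADER = 20    # bytes, IPv4 header without options
ICMP_HEADER = 8   # bytes


def ping_payload_for_mtu(mtu):
    """Largest ping payload that still fits in one frame of the given MTU."""
    return mtu - IP_HEADER - ICMP_HEADER


def jumbo_ping_command(host, mtu=9000, count=3):
    """Build a macOS ping command with the Don't Fragment bit set (-D)."""
    return ["ping", "-D", "-c", str(count),
            "-s", str(ping_payload_for_mtu(mtu)), host]


def jumbo_frames_pass(host, mtu=9000):
    """Return True if full-size, unfragmented pings reach the host."""
    result = subprocess.run(jumbo_ping_command(host, mtu),
                            capture_output=True, text=True)
    return result.returncode == 0


# Print the command for a placeholder host; call jumbo_frames_pass() to run it.
print(" ".join(jumbo_ping_command("diskstation.local")))
```

If the ping fails at 9000 but succeeds at 1500, some device in the path is probably still using standard frames.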

Finally, with the server attached to the 10G switch, disable all the 1G ports on the server to avoid an IP conflict. This was something I wrestled with for a couple of days until I finally realized that you can’t have two different ports into the same server.

Once I realized a 10G switch was essential, the entire connection and configuration process took 30 minutes. Nothing like learning from your mistakes.

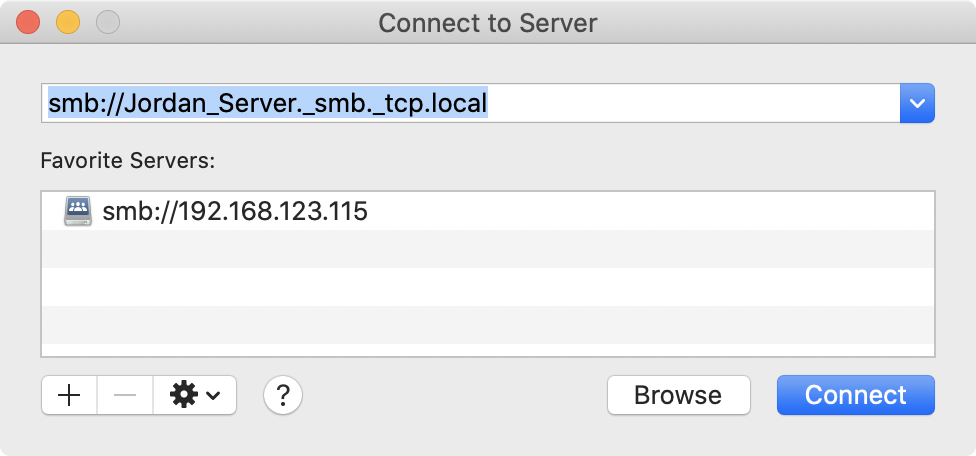

(Browse for servers using the server name, not the IP address.)

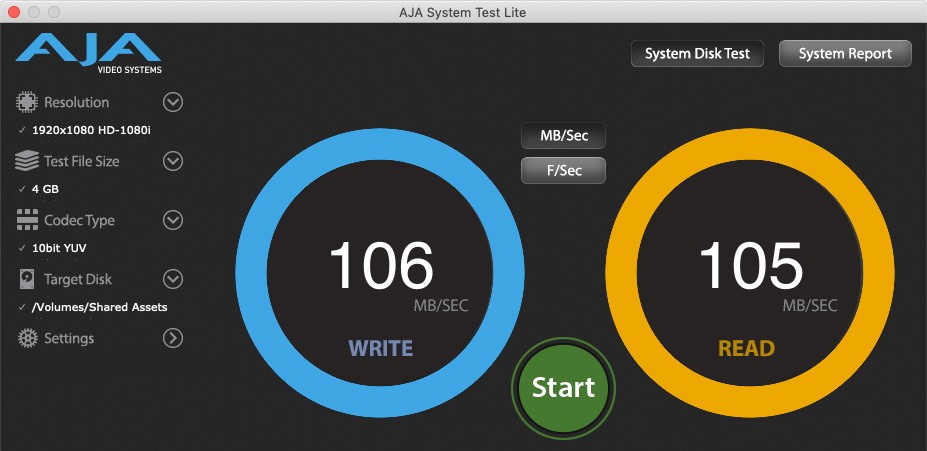

PERFORMANCE TESTING

This section is the reason for all our work: Just how fast is all this new gear? Well, to set some initial expectations, here’s what I expect will happen:

As you’ll discover, some of my expectations were correct, but others weren’t.

1 Gb ETHERNET BASELINE

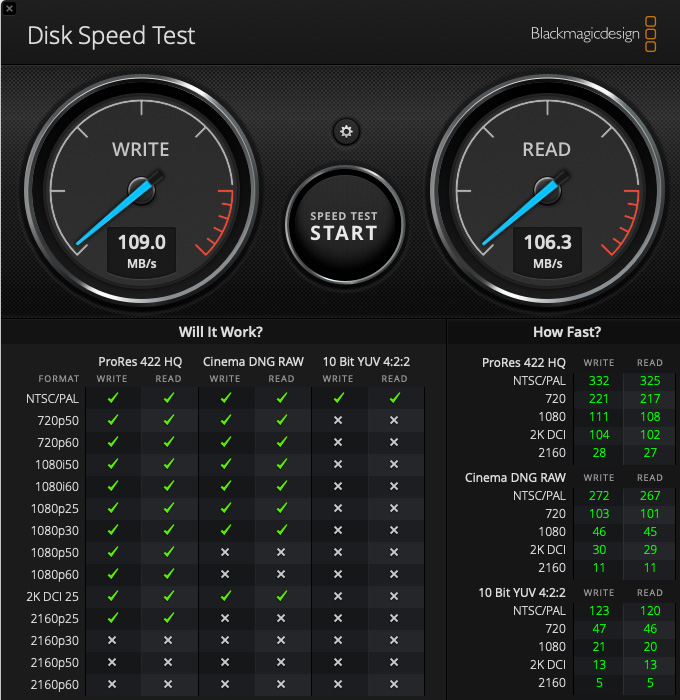

(The baseline speed of 1 Gb Ethernet. Tests done using AJA System Test Lite.)

Here’s the baseline speed with the Mac mini connected using its native 1 Gb Ethernet port through the switch to the server via 1 Gb Ethernet. This is right in line with the typical speed 1 Gb Ethernet can provide.

(Test results using Blackmagic Disk Speed Test.)

As you can see, 1 Gb speed is fast enough for some media formats up to 1080p. But larger codecs, including HDR media, require more speed. This is also a good example of the efficiency of ProRes for video editing.

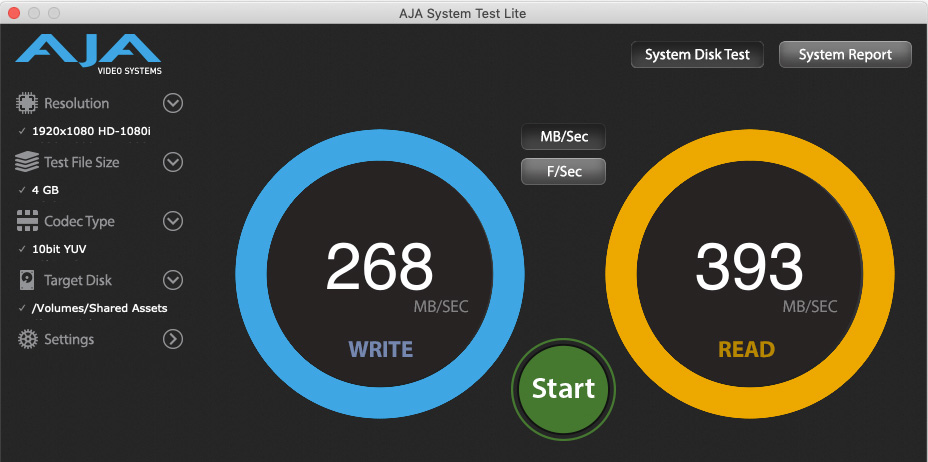

(10G Ethernet speed using the default Mac settings; jumbo frames are turned off.)

Changing the connection to 10G Ethernet but NOT changing the default jumbo frame settings roughly tripled the speed. In other words, if you just connect the OWC unit and keep all the defaults, this is the speed you will get.

NOTE: This was with a single Mac connected through the switch to the server.

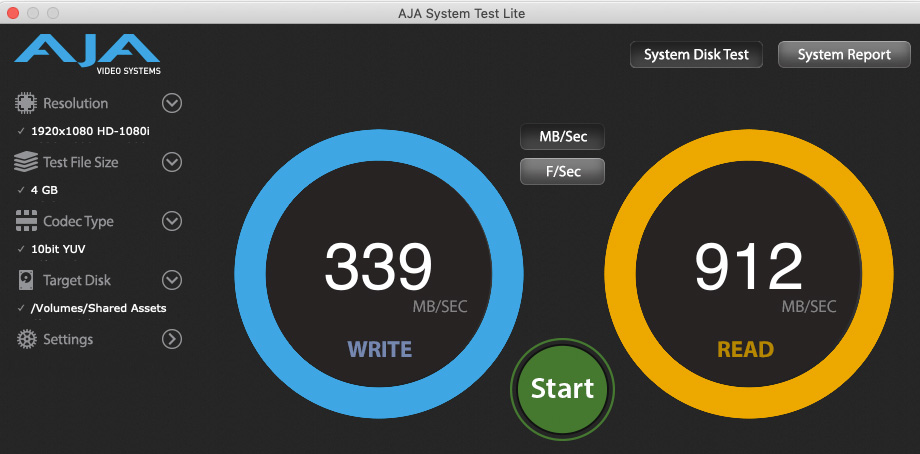

(10G Ethernet speed with jumbo frames enabled and set to 9000.)

But, enabling jumbo frame settings REALLY boosted read speeds, while write speeds were triple that of 1 Gb Ethernet. This is far faster than I was expecting the server to deliver data!

NOTE: Again, this was measured with one computer accessing the server to determine maximum speed.

For video editing, slower write speeds are OK, because rendering and exporting are based upon how fast the CPU can calculate each frame. In general, I’ve found write speeds during export to range between 50-80 MB/second. So slower write speeds are not a big concern in terms of overall editing performance.

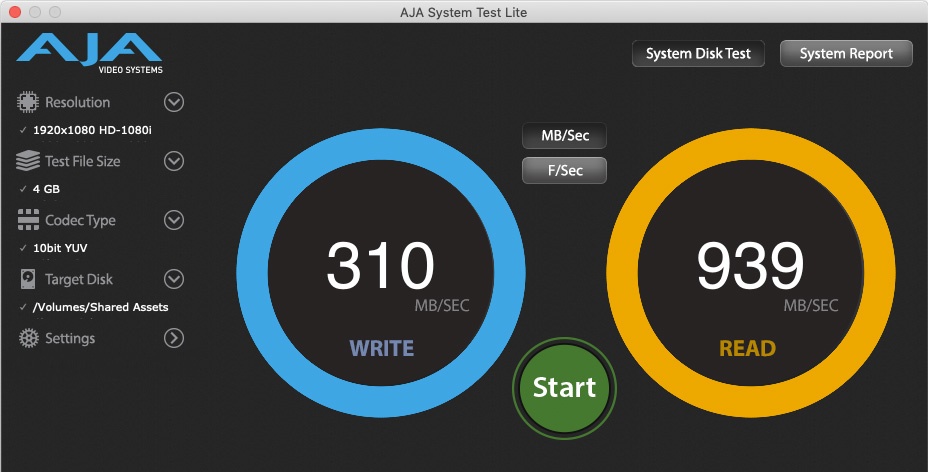

(Two computers accessing the server at the same time. Top: 10G connection. Bottom: 1 Gb. Both computers connected via a 10G switch.)

When two computers access the server at the same time, 10G speeds slow dramatically, though 1 Gb speeds remain about as fast as ever. The top image is the 10G connection, the bottom image is the 1 Gb connection from a second computer running at the same time.

NOTE: I noticed that when both computers were connected to the same shared volume, 10G speeds were about half of what they were when each computer was connected to a separate server volume. That was something I did not expect. That changes the way I think of organizing volumes.

The main reason for the slowdown, as we see here, is the wide variation in access speed caused by the drives inside the RAID hustling to read and write data for both systems accessing the server at the same time. In other words, the drives can’t devote themselves to fulfilling just one data request at a time.

Keep in mind that not all computers access the server at the same time. This means that overall throughput will vary with connection activity.

NOTE: This would not be an issue using a server containing SSDs, as SSDs contain no moving parts and would respond more quickly to all requests. However, SSDs don’t hold nearly as much as HDDs.

(Data transfer rates using Cat5e cable. For short runs, this equals the speed of Cat6a cable.)

One VERY interesting thing I learned was when I replaced the high-speed Cat6A cable with Cat5e cable. Theoretically, Cat5e should show more errors and a slower speed. What I discovered, though, was that it delivered the same throughput during the test as the Cat6A cable.

The lesson here is that, while it is always a good idea to use cable rated for the speed you need, in a pinch, you could use Cat5e cable without significant degradation; at least for short distances and the short term. I still plan to use Cat6A cable – just to be safe.

BIG NOTE: Both cable runs were 50 feet in length. Over twisted-pair copper cable, both 10G and 1 Gb Ethernet are specified for runs of up to 100 meters, and 10G needs Cat 6A cable to reach that distance. Longer cable runs decrease transfer speed.

SUMMARY

I learned a lot doing this exercise. First, I’m going to keep all my 10G gear and continue to look for ways to optimize my network speeds. When transferring hundreds of gigabytes, the faster speed will make a big difference.
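To put that difference in perspective, here is a back-of-the-envelope estimate of transfer time. It assumes the practical ceilings mentioned earlier (about 110 MB/s for 1 Gb and about 1,100 MB/s for 10G) and a single user with the server to themselves. Real transfers from a hard-disk RAID, or with other users active, will be slower.

```python
def transfer_minutes(size_gb, speed_mb_s):
    """Minutes needed to move size_gb gigabytes at a sustained speed in MB/s."""
    return size_gb * 1000 / speed_mb_s / 60


for label, speed in (("1 Gb Ethernet", 110), ("10G Ethernet", 1100)):
    print(f"500 GB over {label}: about {transfer_minutes(500, speed):.0f} minutes")
```

At these rates, a 500 GB transfer takes well over an hour on 1 Gb Ethernet and under ten minutes on 10G.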

However, I also learned that 10G Ethernet is not a panacea. It is a tool, which has benefits, but you also need to understand the limitations. Unlike direct-connected storage, the performance of shared storage over Ethernet will vary depending upon a number of factors. Still, I’m looking forward to taking as much advantage of this new speed as I can.

13 Responses to Does 10 Gb Ethernet Make Sense for Video Editing? [u]

Regarding Cat5e, if you check the actual bandwidth rating of your Cat5e (usually printed on the cable) you will likely find that its spec matches or exceeds Cat6. The Cat5e spec is 100 MHz but most Cat5e is actually rated higher, as high as 350 MHz. Cat6 spec is 250 MHz. There are other minor differences and Cat6 is often over spec too, but, yes, you can definitely use Cat5e in a pinch.

Tod:

This is VERY cool to know. I shall have to read my “cable labels” more carefully.

Thanks for the update.

Larry

excellent article Larry, how much redundant gear is left over with all this now? 🙂

Norm:

One 8 TB hard disk that I’m keeping as a spare. It’s not that I have redundant gear, but that I needed to buy as much gear as I did.

Larry

Thank You so much for such excellent and detailed information which provides the wide view as well as the close up shot of what why and how to set this up. This fits right into the new, real world, and necessary solutions we are being pushed to learn during these auspicious times we are facing with our lives and our work …not to mention our health. (Similar articles usually focus on only part of the story and we are left to try and piece together the rest. ) Your own experience and brand/model/item recommendations are priceless and once again we Thank You for the sharing of your excellent work with all.

Kimu:

Thanks for the kind words. This was harder to do than I thought. I’m glad you liked my work.

Larry

This is a fantastic article Larry. It’s so helpful to have someone go through all these steps in a detailed & systematic way showing the benefits of each new step. It’s really gonna help in my own network changes & upgrades. Currently using 1gb switch with cat5e cables but with a QNAP server capable of 2.5gb x2.

Thank you.

Larry, thank you for such an excellent and detailed review and guide. I think one possibility you could explore for the future is expanding your array of spinning disks and using RAID 10 so that you have higher performance than is possible with RAID 5, especially when you have many more disks, like with an eight- or ten-volume Synology cabinet. Ten spinning disks in such an array, even with a 50% waste for the mirroring, should still be cheaper than SSDs for similar capacity but could completely saturate a 10 gig link even with multiple computers… Another option would be warm and cold tiers where you put SSDs into the five bays of your current cabinet and then back those up for non-current projects to higher capacity spinning disks either via USB or a secondary 1G ethernet cabinet from the Synology value series. The hot tier, for solo editing, could be a thunderbolt SSD like a Samsung X5 (which got a nice recent price reduction on the higher capacity unit) connected to one of those four thunderbolt ports your Mac mini is blessed with! I’m still amazed that the new generation of M1 Macs only have two ports.

Steve:

Thanks for your comments. Given the same number of drives, RAID 10 would be slower than RAID 5. RAID 5 costs the performance of one drive, while RAID 10 costs the performance of half your drives. And to fully saturate 10 Gb Ethernet would take 1 NVMe SSD, 3 PCIe SSDs or 6 spinning hard disks.

The idea of hot (very fast) vs. cold (slower) tiers is worth considering.

As for M1 Macs, remember, these were for the low-end systems; designed to test out the new silicon as much as provide new computers. I have EVERY expectation that more performance – and more ports – are coming.

Larry

Switches do NOT divide the bandwidth.. Each device can max up to 1g on a 1g switch and up to 10g on a 10g switch..

Also, simple option is to just connect 10g switch to router -> both PCs + NAS

Would have been nice to see speeds of SSDs compared in 10g (what I was looking for originally when I found this)

Wut:

You are correct, but you misunderstood. Switches don’t divide bandwidth, that is true.

However, if I have a server connected to a switch using a 1 Gb connection, that 1 Gb of bandwidth from the server needs to be shared (divided) across all devices that are accessing the server at that instant. This isn’t a limitation of the switch, but a limitation of how the server is connected.

You are also correct in that connecting a server via a 10 Gb connection is preferred because the total bandwidth available to the server is now ten times faster. But, not all switches, especially lower-cost switches, support 10 Gb server connections.

Larry

P.S. If I had SSDs, I would happily have compared them, but I don’t… yet.

Great work, you got .939 gigabytes per second from a 1.25 gigabyte per second link (10GbE). There’s a bit more performance available from the Ethernet connection. Don’t know about Synology but I run TrueNAS Scale on a small server with 10G via Intel x520 NIC and a 4 port 10GbE switch similar to yours. Transfers often exceed 1 gigabyte per second. It’s roughly the same performance as an m.2 SSD from 5 years ago. This is crucial in the 4K era. I require a centralized content library that’s always online and shared to 2 Macs + 1 PC via 10GbE, and via wifi6 at speeds over 1Gb/s.

Val:

Thanks for your comments. Ethernet does not always deliver top speeds because it doesn’t support QoS. However, 10G provides more options for faster media delivery, provided your network is optimized for it.

Larry